With the rise of AI, demand for GPUs and other AI-related products has surged, pushing HBM prices sharply higher and bucking the broader memory-market trend. HBM remains in high demand in 2024, lifting the share prices of companies across its supply chain. Last week, SK Hynix's stock rose another 5% and Samsung's rose 1.5%. Upstream chip-equipment maker Tokyo Electron jumped 13% to a market value of $106 billion, setting yet another record high. Japanese semiconductor-equipment company TOWA rose more than 4%.

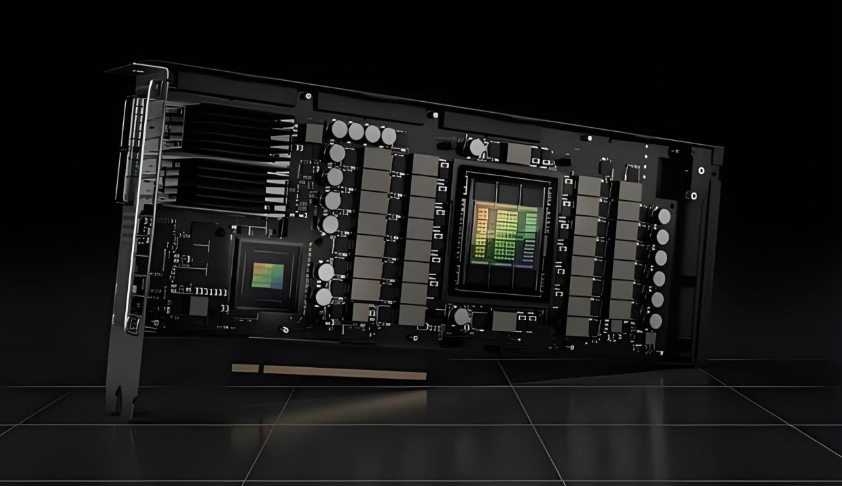

01 | What is HBM? HBM (High Bandwidth Memory) is a newer type of memory (RAM) for CPUs and GPUs. It stacks multiple DRAM dies vertically and packages the stack alongside the GPU. The result is a high-capacity memory array with a very wide data interface.

In other words, HBM works like a highway for data between the memory and the processor, letting data move in and out much faster. Conventional memory is like a single-lane road: usable, but slow. HBM is like a wide eight-lane highway that gets data to its destination faster. By stacking several memory dies, HBM merges many narrow paths into one wide superhighway. This raises data-transfer speed and also increases memory capacity, much like trading a small house for a large villa that can hold far more. HBM is therefore a breakthrough technology that can significantly improve chip performance across a wide range of applications.

Overall, HBM is a high-performance memory solution whose high bandwidth, low latency, and highly parallel data transfer support the development of artificial intelligence. It lets AI models train and run inference faster, helping AI technology spread into more fields.
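The highway analogy can be put into numbers. The peak bandwidth of a memory interface is roughly its bus width multiplied by its per-pin data rate. The sketch below compares one HBM3 stack with one DDR5-4800 DIMM. It assumes nominal figures: a 1024-bit interface at 6.4 Gb/s per pin for HBM3, and a 64-bit data path at 4.8 Gb/s per pin for DDR5. It also shows how stacking dies multiplies capacity, using an assumed 12-high stack of 16 Gb dies. These are illustrative peak values, not measured throughput, and the helper names are hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: each data pin carries gbps_per_pin gigabits per second."""
    return bus_width_bits * gbps_per_pin / 8  # 8 bits per byte


def stack_capacity_gb(dies: int, die_gbit: int) -> float:
    """Capacity in GB of a stack of identical DRAM dies, each die_gbit gigabits."""
    return dies * die_gbit / 8


# Assumed nominal figures (illustrative, not vendor measurements).
hbm3 = peak_bandwidth_gbs(bus_width_bits=1024, gbps_per_pin=6.4)  # one HBM3 stack
ddr5 = peak_bandwidth_gbs(bus_width_bits=64, gbps_per_pin=4.8)    # one DDR5-4800 DIMM

print(f"HBM3 stack: {hbm3:.1f} GB/s")
print(f"DDR5 DIMM:  {ddr5:.1f} GB/s")
print(f"Ratio:      {hbm3 / ddr5:.1f}x")
print(f"12-high stack of 16 Gb dies: {stack_capacity_gb(12, 16):.0f} GB")
```

Under these assumptions a single HBM3 stack offers roughly 21 times the peak bandwidth of a DDR5 DIMM. The gap comes mostly from interface width (the "lanes"), not from per-pin speed. An AI accelerator typically carries several such stacks.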

02 | HBM prices soared 500% against the market trend. In 2023, surging demand for AI GPUs and other AI-related products drove the average selling price (ASP) of HBM up by 500%, even as the wider memory market declined. The AI server GPU market is currently dominated by NVIDIA's H100, A100, and A800 and by AMD's MI250 and MI250X series, all of which use HBM. HBM is the third-largest cost item in an AI server, accounting for about 9% of the total, or as much as $18,000 per server on average. According to market research firm Omnia, HBM is expected to account for more than 18% of the DRAM market this year, up from 9% last year. "At present, no other memory chip can replace HBM in AI computing systems," said one industry insider.

According to data released by market research firm Yole Group on February 8, the average selling price of HBM this year is five times that of conventional DRAM chips. Yole also expects HBM supply to grow at a compound annual growth rate (CAGR) of 45% from 2023 to 2028, because production is hard to expand while demand is surging. Given how difficult it is to add capacity, HBM prices are expected to stay high for a considerable time.

In 2013, SK Hynix worked with AMD to develop the world's first HBM. Today SK Hynix and Samsung dominate HBM manufacturing. SK Hynix holds about 50% of the market, Samsung about 40%, and Micron less than 10%. For a long time, SK Hynix was NVIDIA's sole HBM supplier. In 2023 it essentially monopolized the supply of HBM3, and its HBM3 and HBM3E output for this year is already sold out. As a major HBM supplier to NVIDIA, SK Hynix has seen its share price rise more than 60% over the past year.
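The 45% figure is a compound annual growth rate, so its effect builds up year over year. The minimal sketch below shows this arithmetic. It assumes the rate applies uniformly over the five years from 2023 to 2028, and it indexes 2023 supply to 1.0, which is an illustrative baseline rather than a reported quantity. The function name `project` is hypothetical.

```python
def project(base: float, cagr: float, years: int) -> list[float]:
    """Return the value for each year, compounding base at a constant annual rate."""
    values = [base]
    for _ in range(years):
        values.append(values[-1] * (1 + cagr))
    return values


# Assumption: 2023 HBM supply indexed to 1.0; 45% CAGR through 2028 (Yole's figure).
supply = project(base=1.0, cagr=0.45, years=2028 - 2023)
for year, value in zip(range(2023, 2029), supply):
    print(year, round(value, 2))
```

Compounding at 45% a year for five years leaves 2028 supply at about 1.45^5 ≈ 6.4 times its 2023 level. The same growth spread evenly (5 × 45%) would give only 3.25 times.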

03 | Manufacturers are racing to expand capacity. Major chipmakers are currently positioning themselves aggressively. TSMC is rapidly expanding capacity for SoIC (System on Integrated Chips), its system-level chip integration technology, and has been placing additional orders with equipment makers. Its monthly SoIC capacity is expected to reach about 1,900 wafers by the end of this year and more than 3,000 next year, and could exceed 7,000 by 2027. Notably, TSMC's SoIC is the industry's first high-density 3D chiplet-stacking technology, and the expansion is aimed mainly at strong demand from AI and similar fields. In addition, SK Hynix has reportedly begun recruiting designers for logic chips (such as CPUs and GPUs) and plans to stack HBM4 directly on top of logic chips. SK Hynix is currently discussing this new integration approach with several semiconductor companies, including NVIDIA. SK Hynix forecasts that the HBM market will grow at a compound annual growth rate of 82% through 2027. As of 2022, the HBM market was dominated by the world's top three DRAM makers: SK Hynix with a 50% share, Samsung with about 40%, and Micron with about 10%.

Meanwhile, Chinese domestic manufacturers participate mainly in the upstream equipment and materials supply chain for HBM. For example, Huahai Chengke's investor-relations records from August 2023 show that its granular epoxy molding compound (GMC) can be used in HBM packaging. The related products have passed customer verification and are currently at the sample-delivery stage. As of November 20, the company's share price had doubled since the start of November. In addition, some of Lianrui New Materials' customers are globally renowned GMC suppliers. These suppliers need granular molding materials to accommodate taller packages and meet heat-dissipation requirements.
According to SK Hynix's public disclosures, spherical silica and spherical alumina are key materials for upgrading HBM. Lianrui New Materials has also begun supplying the spherical silica and spherical alumina used in HBM. The HBM market is currently dominated by overseas giants. However, the arrival of large AI models such as ChatGPT has led technology companies including Baidu, Alibaba, iFlytek, SenseTime, and Huawei to train their own large models, and demand for AI servers has surged. This means that an independent, controllable supply of memory chips remains an important direction for the industry. Localizing HBM production will therefore become a major trend.
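The TSMC SoIC capacity targets cited in this section imply a steep ramp. The sketch below backs out the constant annual growth rate that links two endpoints. It makes three assumptions: the capacity figures are wafers per month, "end of this year" means end-2024, and "by 2027" means end-2027, giving three years of growth. It uses the lower bounds of the quoted targets. The function name `implied_cagr` is hypothetical.

```python
def implied_cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns start into end over the given years."""
    return (end / start) ** (1 / years) - 1


# Assumptions: ~1,900 wafers/month at end-2024 and ~7,000 at end-2027 (three years),
# using the lower bounds of the quoted targets.
rate = implied_cagr(start=1_900, end=7_000, years=3)
print(f"Implied SoIC capacity CAGR: {rate:.1%}")

# Consistency check: one year of growth at that constant rate from the 2024 level.
print(f"Implied 2025 capacity: {1_900 * (1 + rate):,.0f} wafers/month")
```

Under these assumptions, capacity would need to grow by roughly 54% a year. At that constant pace the 2025 level comes out at a little under 3,000 wafers per month, in the same range as the "more than 3,000" target.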

04 | Jingtai Viewpoint: HBM is promising, but the domestic industry should still approach it with a cool head. HBM is still at a relatively early stage and has a long way to go. Even so, the AI industry is developing rapidly and related technologies are iterating quickly. As a result, memory product design will inevitably grow more complex and bandwidth requirements will keep rising. This steadily growing demand for bandwidth will continue to drive HBM's development. In the secondary market, large AI model training keeps advancing, giving HBM, as a key technology, very high growth certainty. Related investment opportunities are expected to center on packaging equipment and materials and on domestic companies that supply the HBM supply chain.